TL;DR – A reprint of a feature article of mine on dark energy that was published in StarDate magazine in July 2007.

Forget the Large Hadron Collider (LHC), with its alleged ability to create earth-sucking microscopic black holes and its forthcoming efforts to simulate, 100 metres beneath the Swiss countryside, conditions a trillionth of a second after the Big Bang. There is a far bigger puzzle facing science that the LHC cannot answer: What is the mysterious energy that seems to be accelerating ancient supernovae at the farthest reaches of the universe?

In the late 1990s, the universe changed. The sums suddenly did not add up. Observations of Type Ia supernovae, the remnants of stars that exploded billions of years ago, showed that not only are they getting further away as the universe expands but they are moving faster and faster. It is as if a mysterious invisible force pervades the cosmos, working against gravity and accelerating the expansion of the universe. This force has become known as dark energy. Although it apparently fills the universe, scientists have absolutely no idea what it is or where it comes from. Several big research teams around the globe are working with astronomical technology that could help them find an answer.

Until Type Ia supernovae appeared on the cosmological scene, scientists thought that the expansion of the universe following the Big Bang was slowing down. Type Ia supernovae are very distant objects, which means their light has taken billions of years to reach us. But their brightness can be measured to such a high degree of accuracy that they provide astronomers with a standard beacon with which the vast emptiness of space can be illuminated, figuratively speaking.
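The standard-beacon idea rests on the inverse-square law: a source of known luminosity looks fainter in proportion to the square of its distance. The following is a minimal sketch in Python of that relationship only. The function names are illustrative, and it ignores the calibration and redshift corrections that real supernova analyses need.

```python
import math

def luminosity_distance(luminosity_watts: float, flux_watts_per_m2: float) -> float:
    """Distance in metres implied by the inverse-square law F = L / (4 * pi * d**2)."""
    if luminosity_watts <= 0 or flux_watts_per_m2 <= 0:
        raise ValueError("luminosity and flux must be positive")
    return math.sqrt(luminosity_watts / (4 * math.pi * flux_watts_per_m2))

def distance_ratio(flux_near: float, flux_far: float) -> float:
    """How many times farther the fainter of two identical beacons is."""
    if flux_near <= 0 or flux_far <= 0:
        raise ValueError("fluxes must be positive")
    return math.sqrt(flux_near / flux_far)

# Two identical beacons: the one that appears four times fainter is twice as far away.
print(distance_ratio(4.0, 1.0))
```

Because only the ratio of fluxes enters `distance_ratio`, relative distances can be compared without knowing the absolute luminosity, provided the beacons really are identical.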

The supernovae data, obtained by the High-Z SN Search team and the Supernova Cosmology Project, based at Lawrence Berkeley National Laboratory, suggested that not only is the universe expanding, but that this expansion is accelerating. On the basis of the Type Ia supernovae, the rate of acceleration suggests that dark energy comprises around 73% of the total energy of the universe, with dark matter representing about 24% and all the planets, stars, galaxies, black holes, etc. containing a mere 4%.

HETDEX, TEX STYLE

Professor Karl Gebhardt and Senior Research Scientists Dr Gary Hill and Dr Phillip McQueen, with their colleagues running the Hobby-Eberly Telescope Dark Energy Experiment (HETDEX) based at the McDonald Observatory in Texas, are among the pioneers hoping to reveal the source and nature of dark energy. Those ancient supernovae are at a “look-back time” of 9 billion years, just two-thirds of the universe’s age. HETDEX will look back much further, to 10 to 12 billion years.

HETDEX will not be looking for dark energy itself but its effects on how matter is distributed. “In the very early Universe, matter was spread out in peaks and troughs, like ripples on a pond. Galaxies that later formed inherited that pattern,” Gebhardt explains. A detailed 3D map of the galaxies should reveal the pattern. “HETDEX uses the characteristic pattern of ripples as a fixed ruler that expands with the universe,” explains Hill. Applying this ruler means mapping out the positions of the galaxies, which needs a lot of telescope time and a powerful new instrument. “Essentially we are just making a very big map [across some 15 billion cubic light years] of where the galaxies are and then analyzing that map to reveal the characteristic patterns,” Hill adds.

“We’ve designed an upgrade that allows the HET to observe 30 times more sky at a time than it is currently able to do,” Hill says. HETDEX will produce much clearer images and work much better than previous instruments, says McQueen. Such a large field of view needs technology that can analyze the light from those distant galaxies very precisely: 145 detectors, known as spectrographs, which will simultaneously gather the light from tens of thousands of fibers. “When light from a galaxy falls on one of the fibers its position and distance are measured very accurately,” adds Hill.

The team has dubbed the suite of spectrographs VIRUS. “It is a very powerful and efficient instrument for this work,” adds Hill, “but is simplified by making many copies of the simple spectrograph. This replication greatly reduces costs and risk as well.”

McQueen adds that, having designed VIRUS, the team has built a prototype of one of the 145 unit spectrographs. VIRUS-P is now operational on the Observatory’s Harlan J. Smith 2.7 m telescope. “We’re delighted with its performance,” he told us, “and it’s given us real confidence in this part of our experiment.”

VIRUS will make observations of 10,000 galaxies every night. So, after just 100 nights VIRUS will have mapped a million galaxies. “We need a powerful telescope to undertake the DEX survey as quickly as possible,” adds McQueen. Such a map will constrain the expansion of the universe very precisely. “Since dark energy only manifests itself in the expansion of the universe, HETDEX will measure the effect of dark energy to within one percent,” Gebhardt says. The map will allow the team to determine whether the presence of dark energy across the universe has had a constant effect or whether dark energy itself evolves over time.
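The survey arithmetic above is easy to check. Here is a minimal sketch in Python that uses only the two figures quoted in the text (10,000 galaxies a night and a target of one million). The function name is illustrative. The constant nightly rate, with no nights lost to weather or maintenance, is a simplifying assumption rather than the HETDEX team's own planning model.

```python
def nights_needed(target_galaxies: int, galaxies_per_night: int) -> int:
    """Whole observing nights needed to reach the target at a constant nightly rate."""
    if target_galaxies < 0:
        raise ValueError("target_galaxies must not be negative")
    if galaxies_per_night <= 0:
        raise ValueError("galaxies_per_night must be positive")
    # Integer ceiling division: a partly used night still counts as a night.
    return -(-target_galaxies // galaxies_per_night)

# Figures quoted in the article.
GALAXIES_PER_NIGHT = 10_000
TARGET_GALAXIES = 1_000_000

print(nights_needed(TARGET_GALAXIES, GALAXIES_PER_NIGHT))  # 100
```

Halving the assumed nightly rate doubles the answer, which shows how strongly the length of the survey depends on the instrument's throughput.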

“If dark energy’s contribution to the expansion of the universe has changed over time, we expect HETDEX to see the change [in its observations],” adds Gebhardt. “Such a result will have profound implications for the nature of dark energy, since it will be something significantly different than what Einstein proposed.”

SLOAN RANGER

Scientific scrutiny of the original results has been so intense that most cosmologists are convinced dark energy exists. “There was a big change in our understanding around 2003-2004 as a triangle of evidence emerged,” says Bob Nichol of the University of Portsmouth, England, who is working on several projects investigating dark energy.

First, the microwave background, the so-called afterglow of creation, showed that the geometry of the universe has a mathematically “flat” structure. Secondly, the data from the Type Ia supernovae measurements show that the expansion is accelerating. Thirdly, results from the Anglo-Australian 2dF redshift survey and then the Sloan Digital Sky Survey (SDSS) showed that on the large scale, the universe is lumpy with huge clusters of galaxies spread across the universe.

The SDSS carried out the biggest galaxy survey to date and confirmed gravity’s role in the growth of structure in the universe by looking at the ripples of the Big Bang across the cosmic ocean. “We are now seeing the corresponding cosmic ripples in the SDSS galaxy maps,” Daniel Eisenstein of the University of Arizona has said. “Seeing the same ripples in the early universe and the relatively nearby galaxies is smoking-gun evidence that the distribution of galaxies today grew via gravity.”

But why did an initially smooth universe become our lumpy cosmos of galaxies and galaxy clusters? An explanation of how this lumpiness arose might not only help explain the evolution of the early universe, but could shed new light on its continued evolution and its ultimate fate. The SDSS project will also provide new insights into the nature of dark energy’s materialistic counterpart, dark matter.

As with dark energy, dark matter is a mystery. Scientists believe it exists because without it the theories that explain our observations of how galaxies behave would not stack up. Dark matter is so important to these calculations that a value for all the mass of the universe five times bigger than the sum of all the ordinary matter has to be added to the equations to make them work. While dark energy could explain the accelerating expansion of our universe, the existence of dark matter could provide an explanation for how the lumpiness arose.

“In the early universe, the interaction between gravity and pressure caused a region of space with more ordinary matter than average to oscillate, sending out waves very much like the ripples in a pond when you throw in a pebble,” Nichol, who is part of the SDSS team, explains. “These ripples in matter grew for a million years until the universe cooled enough to freeze them in place. What we now see in the SDSS galaxy data is the imprint of these ripples billions of years later.”

Colleague Idit Zehavi, now at Case Western University, strikes a different tone. Gravity’s signature could be likened to the resonance of a bell, she suggests. “The last ring gets forever quieter and deeper in tone as the universe expands. It is now so faint as to be detectable only by the most sensitive surveys. The SDSS has measured the tone of this last ring very accurately.”

“Comparing the measured value with that predicted by theory allows us to determine how fast the Universe is expanding,” explains Zehavi. This, as we have seen, depends on the amount of both dark matter and dark energy.

The triangle of evidence – microwave background, Type Ia supernovae, and galactic large-scale structure – leads to only one possible conclusion: that there is not enough ordinary matter in the universe to make it behave in the way we observe and there is not enough normal energy to make it accelerate as it does. “The observations have forced us, unwillingly, into a corner,” says Nichol. “Dark energy has to exist, but we do not yet know what it is.”

The next phase of SDSS research will be carried out by an international collaboration and, along with the HETDEX results, should sharpen the triangle still further. “HETDEX adds greatly to the triangle of evidence for dark energy,” says Hill, “because it measures large-scale structure at much greater look-back times between local measurements and the much older cosmic microwave background.” As the results emerge, scientists might face the possibility that dark energy has changed over time, or the results may present evidence that requires modifications to the theory of gravity instead.

The Anglo-Australian team is also undertaking its own cosmic ripple experiment, Wiggle-Z. “This program is measuring the size of ripples in the Universe when the Universe was about 7 billion years old,” Brian Schmidt at Australian National University says. Schmidt was leader of the High-Z supernovae team that found the first evidence of acceleration. SDSS and 2dF covered 1-2 billion years ago and HETDEX will measure ripples at 10 billion years. “Together they provide the best possible measure of what the Universe has been doing over the past several [billion] years,” Schmidt muses.

INTERNATIONAL SURVEY

The Dark Energy Survey (DES), another international collaboration, plans a camera that will make any photographer green with envy, but thankful they don’t have to carry it with them. The Fermilab team plans to build an extremely sensitive 500-megapixel camera, 1 meter in diameter and with a 2.2-degree field of view, that can grab those millions of pixels within seconds.

The camera itself will be mounted in a cage at the prime focus of the Blanco 4-meter telescope at Cerro Tololo Inter-American Observatory, a southern hemisphere telescope owned and operated by the National Optical Astronomy Observatory (NOAO). This instrument, while being available to the wider astronomical community, will provide the team with the necessary power to conduct a large scale sky survey.

Over five years, DES will use almost a third of the available telescope time to carry out its wide survey. The team hopes to achieve exceptional precision in measuring the properties of dark energy using counts of galaxy clusters, supernovae, large-scale galaxy clustering, and measurements of how light from distant objects is bent by the gravity of closer objects between them and the Earth. By probing dark energy using four different methods, the Dark Energy Survey will also double-check for errors, according to team member Joshua Frieman.

WFMOS

According to Nichol, “The discovery of dark energy is very exciting because it has rocked the whole of science to its foundations.” Nichol is part of the WFMOS (wide field multi-object spectrograph) team hoping to build an array of spectrographs for the Subaru telescope. These spectrographs will make observations of millions of galaxies across an enormous volume of space at distances equivalent to almost two-thirds of the age of the universe. “Our results will sit between the very accurate HETDEX measurements and the next generation SDSS results coming in the next five years,” he explains. “All the techniques are complementary to one another, and will ultimately help us understand dark energy.”

DESTINY’S CHILD

If earth-based studies have begun to reveal the secrets of dark energy, then three projects vying for attention could take the experiments off-planet to get a slightly closer look. The projects all hope to look at both supernovae and the large-scale spread of matter. Combining methods in this way should make them less error-prone than any single technique and so provide definitive results.

SNAP, SuperNova/Acceleration Probe, is led by Saul Perlmutter of Lawrence Berkeley National Laboratory in Berkeley, California, one of the original supernova explorers. SNAP will observe light from thousands of Type Ia supernovae in the visible and infra-red regions of the spectrum as well as look at how that light is distorted by massive objects in between the supernovae and the earth.

Adept, Advanced Dark Energy Physics Telescope, is led by Charles Bennett of Johns Hopkins University in Baltimore, Maryland. This mission will also look at near-infrared light from 100 million galaxies and a thousand Type Ia supernovae. It will look for those cosmic ripples and so map out the positions of millions of galaxies. This information will allow scientists to track how the universe has changed over billions of years and the role played by dark energy.

Destiny, Dark Energy Space Telescope, led by Tod Lauer of the National Optical Astronomy Observatory, based in Tucson, Arizona, will detect and observe more than 3000 supernovae over a two-year mission and then survey a vast region of space looking at the lumpiness of the universe.

LIGHTS OUT ON DARK ENERGY

So, what is dark energy? “At this point it is pure speculation,” answers Hill. “The observations are currently too poor, so we are focusing on making the most accurate measurements possible.” Many scientists are rather embarrassed but equally excited by the thought that we understand only a tiny fraction of the universe. Understanding dark matter and dark energy is one of the most exciting quests in science. “Right now, we have no idea where it will lead,” adds Hill.

“Despite some lingering doubts, it looks like we are stuck with the accelerating universe,” says Schmidt. “The observations from supernovae, large-scale structure, and the cosmic microwave background look watertight,” he says. He too concedes that science is left guessing. The simplest solution is that dark energy was formed along with the universe. The heretical solution would mean modifying Einstein’s theory of General Relativity, which has so far been a perfect predictor of nature. “Theories abound,” Schmidt adds, “whatever the solution, it is exciting, but a very, very hard problem to solve.”

This David Bradley special feature article originally appeared on Sciencebase last summer, having been published in print in StarDate magazine – 2007-07-01-21:12:X1

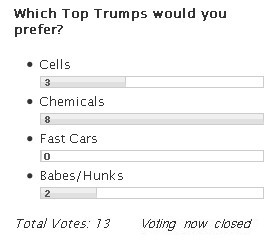