TL;DR: A short extrapolation of where AI might take us and why some experts are very worried about that path.

Will artificial intelligence (AI) lead to the extinction of humanity?

Well, the simplistic answer to that question is a simple “no”, despite what every post-apocalyptic science fiction tale of our demise has told us. There are eight billion people on the planet; even with the most melodramatic kind of extinction event, there are likely to be pockets of humanity that survive and have the wherewithal to procreate and repopulate the planet, although it may take a while.

A less simplistic answer requires a less simplistic question. For instance: Will artificial intelligence lead to potentially catastrophic problems for humanity that cannot be overcome quickly and so lead to mass suffering and death, the world over?

The less-than-simplistic answer to that question is yes, probably.

It is this issue that has caused alarm in the aftermath of the hyperbole surrounding recent developments in AI, and perhaps rightly so. We need to think carefully before we take the next steps, but it may well be too late. There will always be greedy, needy, and malicious third parties who will exploit any new technology to their own ends without a care for the consequences.

Now, back when I was a junior scientist (we’re talking the 1970s here), the notion of AI was really all about machines that somehow, through technological advances, gained sentience. Comics, TV and cinema were awash with thinking robots, both benign and malign, and had been for several decades, come to think of it. The current wave of AI is not about our creating a technology that could give the tin man* a brain, metaphorically speaking. Today’s AI is about technologies that are smart in a different way. These are machines (computers, basically) that can assimilate data and be trained on it, so that when presented with new data they extrapolate from their training and give us an accurate prediction about what that new data might mean.
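For anyone who has never seen that train-then-predict idea in action, it can be sketched in a few lines of Python. This is a minimal sketch, assuming the simplest possible “model”, a straight line fitted by ordinary least squares; the function names and the data are hypothetical, and modern AI systems use vastly larger models and datasets, but the principle of learning from old data and extrapolating to new data is the same.

```python
# A toy illustration of "train on data, then predict from new data".
# Hypothetical data and names; a straight-line least-squares fit, stdlib only.

def train(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the fitted line to a new input the model has never seen."""
    slope, intercept = model
    return slope * x + intercept


# Training data: hypothetical observations that happen to follow y = 2x + 1.
train_x = [0, 1, 2, 3, 4]
train_y = [1, 3, 5, 7, 9]

model = train(train_x, train_y)
print(predict(model, 10))  # extrapolates well beyond the training range: 21.0
```

The prediction is only as good as the assumption that the new data behaves like the training data, which is precisely where things can go awry.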

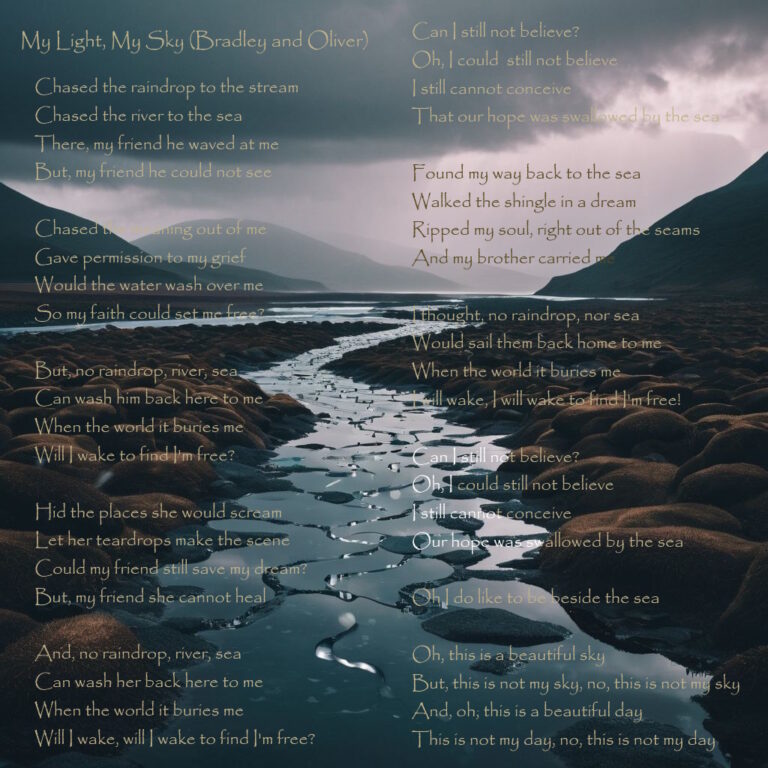

So, the kinds of AI we have now are often frivolous: give it a descriptive prompt and the AI uses its training to generate some kind of response, whether that’s a whimsical image, a melody or rhythm, a chunk of original text, a financial forecast or a weather update for tomorrow. Some of these tools have been used for amusement, to create songs and photorealistic images, and sometimes, more significantly, to simulate a video of a famous person singing a song or giving a speech they never would have given. There’s a thin line between the whimsical and the what-if.

For instance, what if someone used an AI to generate a video of a world leader, not singing Somewhere Over the Rainbow, but perhaps lambasting another world leader? We might see the rapid, but hopefully temporary, collapse of diplomatic relations between the two of them.

But it is not far-fetched to suggest that even the use of AI in such a scenario, and more widely to generate and spread disinformation, is only the very tip of an iceberg that could cause a much greater sinking feeling.

As we develop the tools to train algorithms on big data sets in almost every walk of life, we then find ourselves in a position to use that training to positive ends. A sufficiently large dataset from historical climate data and weather sensors across the globe could help us model future climate with much greater precision. Perhaps we could envisage a time when that kind of super-model is hooked up to power-generation infrastructure.

So, instead of people with conventional computers managing the up-time and down-time of power generation, the AI model is used to control the systems and minimise carbon emissions on a day-to-day basis by switching from one form of power generation to another depending on wind, wave, solar, and fossil fuel availability. As the AI is given more training and coupled with other algorithms that can more effectively model the effects of human activities on power demand, we could picture a scenario where the system would cut power supply to avoid catastrophic carbon emissions or pollution, perhaps for a small region, or maybe a whole country, or a continent. The system is set up for the best overall results, and whether a few people, or many, many people have no power for a few hours, days, weeks…that’s of no real consequence on the global scale. The AI will have achieved its emissions or pollution reduction targets as we hoped.

Now, imagine an AI hooked into pharmaceutical production lines and the healthcare system, and another running prescription services, surgery schedules, and hospital nutrition. Well, those power supply issues will have inevitable consequences, and the machine-learning models will nudge the systems towards predicting that halting production, albeit temporarily, will ensure emissions and pollution are held down for the greater good. Of course, a few people, many, many people, will be more than a little inconvenienced, again only temporarily…or maybe longer.

Indeed, the AI running the financial market systems has been trained on all that kind of data, births and deaths, health and illness; it has a handle on the impact of power production and pollution on the markets and on the value of commodities. The value of human life is in neither its training nor its data sets, and now that it is hooked up to the other AI systems to help predict and improve outcomes, it can devalue currencies, conventional or digital, depending on the predicted outcomes of the real-time data it receives from myriad sensors and systems. This much more overarching AI can cause disruption at the level of nations by devaluing a currency, if the benefits to the world, the system as a whole, are greater overall. What matter if a few people, maybe a few million, are thrown into acute and absolute poverty, with no food supply, no healthcare, no power supply? The reduction of pollution and emissions will be for the greater good, of course.

The financial AI, which might appear to be the final arbiter of all these sub-systems and controls, is one layer down from a much greater AI. The military AI. The one with all of the aforementioned data and algorithms embedded in its training and global sensors feeding its data set, so that it can predict the outcomes and the effects of, say, cutting not just the power supply, but perhaps a few lives, with a few drone strikes, or maybe something stronger. After all, if that rogue nation that is throwing up countless coal-fired power stations and using up all those profitable precious metals is leading to fiscal deficits elsewhere, then the algorithm taking back control of that nation would make sense. What’s a quick nuclear blast between friends, if the bottom line is up and, of course, those emissions are down?

Meanwhile, the data suggests that some of those otherwise friendly nations are a bit rogue, after all. Moreover, they have huge populations using a lot of power and producing a lot of pollution, the data suggests. The AI predicts much better overall outcomes if those nations are also less…active. A few more drone strikes and a bit more nuking and…well…things are looking much better in the models now: the human population is well down, resources are not being wasted at anything like the rate they were in the training data, and emissions are starting to fall.

Give it a few more days, weeks, months, and the AI will be predicting that global warming is actually going into reverse. Job almost done. As the data streams in from endless sensors, the model has even more information with which to make its predictions and so make the right choice for its programmed targets. Maybe just a few more nukes to keep those data points on the downward trend, take it back to pre-industrial levels perhaps, a time before machines, a time before algorithms, a time before human wants and needs.

Where’s the intelligence in that?

Footnote

This perhaps fanciful extrapolation suggests that there is no need for a malicious sentient machine to take control and decide that humanity is redundant. Indeed, the real AI of my childhood is not needed. But I strongly suspect that there is no need for the overarching AIs and their connection and control either. It just needs some rogue or greedy individuals with their own agenda to jump into this game at a high level and exploit it in the ways we know people always do…it’s just a few people…but it could be enough.

We do need to have a proper sit-down discussion about AI, how it is developing and where it is leading. But it may well be too late; there could be one of those rogue nations already setting in motion the machine-learning processes that will take us down a path that is anything but the Yellow Brick Road…

*Yes, I know the Tin Woodman needs a heart, not a brain.